In my last post I described how Maven mediates conflicts in the dependency tree in what is probably the vast majority of builds. Somewhat surprisingly, this default algorithm ignores the actual version numbers of the declared dependencies. Instead, it uses the distance to the root of the dependency tree to decide which dependencies make it into the build.

Now I'm going to cover another way Maven can handle conflicts among transitively resolved dependencies. Maven switches to this alternative algorithm as soon as it detects a concrete version number for one of the dependencies involved in a conflict. As I explained in my last post, version numbers without any decoration are considered a recommendation, which Maven may or may not follow. In contrast, if you put square brackets around the version in your dependency declaration, Maven assumes you mean it: you have now defined an exact version of that dependency. If Maven cannot deliver the desired version(s) to your build, it reports an error and the build fails.
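To make the difference concrete, here is a minimal sketch of the two kinds of declaration. The Log4J coordinates are the usual `log4j:log4j` ones; the two fragments are alternatives, not meant to appear in the same POM.

```xml
<!-- Recommendation: a plain version number. Maven may pick a different
     version if conflict mediation decides so. -->
<dependency>
  <groupId>log4j</groupId>
  <artifactId>log4j</artifactId>
  <version>1.2.11</version>
</dependency>

<!-- Concrete version: square brackets. Maven must deliver exactly
     this version, or the build fails. -->
<dependency>
  <groupId>log4j</groupId>
  <artifactId>log4j</artifactId>
  <version>[1.2.11]</version>
</dependency>
```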

Just as a side note: the syntax for concrete versions allows many more variants than a single specific version. In short, it supports the following definitions:

* [A]: Defines one specific version A

* [A,B]: Defines a range of versions from A to B (inclusive)

* [A,]: Defines a range from A (inclusive) upwards

* [,A]: Defines a range up to A (inclusive)

Instead of square brackets, you can use round brackets to define a version range that excludes the delimiting versions. For example, [A,B) defines a range from A (inclusive) up to B (exclusive).
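In a POM, the range simply goes into the `version` element. As an illustration (the version numbers are chosen only for this example), a half-open range could look like this:

```xml
<dependency>
  <groupId>log4j</groupId>
  <artifactId>log4j</artifactId>
  <!-- Any version from 1.2.12 (inclusive) up to, but not including, 1.2.16 -->
  <version>[1.2.12,1.2.16)</version>
</dependency>
```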

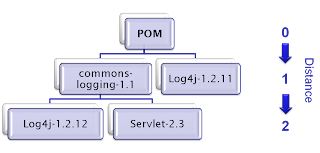

Now, let's see what happens if a concrete version pops up in the dependency tree. Honestly, I didn't find a real example, because concrete versions are rarely used. So I constructed a hypothetical sample based on the ones from my last post. It assumes that someone introduced a concrete version range in the POM of commons-logging. The range states that commons-logging needs Log4J in a version between 1.2.12 and 1.2.16 (inclusive). Our sample project itself references this hypothetical version of commons-logging and Log4J V1.2.11.
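Sketched as POM fragments, the setup might look roughly like this. The commons-logging version `X.Y.Z-hypothetical` is a placeholder I made up for the modified release; everything else follows the description above.

```xml
<!-- Fragment of the hypothetical commons-logging POM:
     Log4J is required as a concrete version range. -->
<dependency>
  <groupId>log4j</groupId>
  <artifactId>log4j</artifactId>
  <version>[1.2.12,1.2.16]</version>
</dependency>

<!-- Fragment of our sample project's POM:
     commons-logging plus a recommended Log4J version. -->
<dependencies>
  <dependency>
    <groupId>commons-logging</groupId>
    <artifactId>commons-logging</artifactId>
    <!-- placeholder for the hypothetical release -->
    <version>X.Y.Z-hypothetical</version>
  </dependency>
  <dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.11</version>
  </dependency>
</dependencies>
```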

If we execute this build, Maven decides that Log4J 1.2.16 must be included. This is in contrast to the examples in my last post, where Maven decided in all cases that Log4J V1.2.11 was the "right one" for the build. The point is that this different behavior is triggered not by the POM of our sample project, but by the POM of commons-logging. We're not in control of this POM: someone else changed it, and suddenly we end up with a different Log4J version in our build.

So why did this happen? Maven detected a conflict in the dependency tree: we requested Log4J V1.2.11, while commons-logging requested a range of Log4J versions that does not include it. Since commons-logging used a concrete version range, the default algorithm is skipped. Instead, Maven actually looks at the values of the conflicting versions. First, it tries to find the overlap of all concrete version ranges. In our case this is simple, because there is just one such range. Next, Maven checks whether the nearest recommended version fits into that overlap. If it does, Maven selects the recommended version. In our case it doesn't (V1.2.11 does not fit into [1.2.12,1.2.16]), so Maven selects the highest version within the range. In our sample, this is V1.2.16.

What would happen if the version range were [1.2.11,1.2.16]? In this case, Maven would select Log4J V1.2.11, because the recommended version in our POM now fits into the concrete range [1.2.11,1.2.16] defined in commons-logging. And what would happen if we defined a concrete version [1.2.11] in our POM while commons-logging defined [1.2.12,1.2.16]? In this case, the build would fail, because the two concrete ranges do not overlap.

After reading all this, you might come to the conclusion that all dependency versions should be defined using concrete version numbers. IMO, this is one of two valid options. The other one is to make use of the POM section dependencyManagement. Instead of defining the versions directly at the dependencies, you define them below the element dependencyManagement in your POMs. Maven guarantees that all versions you define in this section are actually used for your build. In the example above, you get Log4J V1.2.11, and the concrete version range defined in commons-logging is ignored. The drawback of this approach is obvious: you get your desired version, but you get no notification that an unresolved conflict exists in the dependency tree.
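For the sample project, such a setup could look roughly like the following sketch. It assumes the same `log4j:log4j` coordinates as before; the dependency itself is then declared without a version.

```xml
<dependencyManagement>
  <dependencies>
    <!-- The version defined here is used for the whole build,
         including transitive occurrences of Log4J. -->
    <dependency>
      <groupId>log4j</groupId>
      <artifactId>log4j</artifactId>
      <version>1.2.11</version>
    </dependency>
  </dependencies>
</dependencyManagement>

<dependencies>
  <dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <!-- no version element: it is taken from dependencyManagement -->
  </dependency>
</dependencies>
```

Since Maven stays silent about the overridden range, it can be worth running `mvn dependency:tree` from time to time to see which versions actually end up in the build.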
