This is a blog about my life as an Enterprise Java consultant. It covers all Java and JEE technologies. But I will also share my thoughts about the processes, philosophies and people behind the technology. These topics are in most projects much more important than technology (and believe me: this is sometimes hard to realize for a nerd that started on the C64).

Thursday, December 30, 2010

Pros and cons of programming language inflation

I've read several posts and articles about this phenomenon. Sometimes it is discussed in conjunction with the term "polyglot programmer". The idea behind this term is that you as a developer should continually learn new languages. By doing so you are supposed to somehow look behind the scenes and become a better programmer by learning from the different abstractions that are used in the different languages. This does not sound bad to me. If you are a skillful programmer you are probably able to improve your skill level this way. You could probably do so by reading books about programming concepts, too, but learning new languages is definitely more fun.

Additionally, the concepts of new languages are sometimes really able to improve the quality of our work. This happens, in my opinion, when a new language is able to transport a theoretical concept out of the computer science ivory tower into the real world. In my opinion, Java did exactly this for object-oriented programming (and some other concepts like garbage collection and virtual machine execution). Now, if people invent languages like Clojure, they enable programmers to easily use the concepts of functional programming in their projects. Since Clojure is based on the JVM, it has a much better chance of really succeeding in this task than, for example, Lisp. Who knows, maybe ten years from now the majority of the development community will have moved on to functional languages. One possible reason for that move might be that increasingly parallel hardware simply calls for new programming concepts. From this point of view, the invention of new languages is a must and absolutely makes sense.

On the other hand, the inflation of programming languages is a massive problem for businesses that consider software as an investment. Even if a new language decreases the overall costs of a new software system by, let's say, 20% (which is a LOT and not realistic), the savings will be eaten up quite fast in the maintenance phase. You'll need either more people with different language skills or the same number of people with broader skills. Both variants are more expensive than maintaining systems in just one language.

Today it's easy to focus on Java or .NET or even C++ as the main programming language in a company. At least my customers try to focus their IT investments this way. The more programming languages evolve, the more difficult it is to enforce a focused technology selection. A lot of discussions pop up around the language selection process right now, and these discussions will become more intense the more languages enter the market. From this point of view, language inflation is anything but positive. It makes IT projects even more complicated and risky than they are right now (risky, because you could easily choose the wrong language for your problem domain).

As a software architect I cannot simply dismiss the arguments of the people who are focused on the figures. Developing systems is fun, but at the end of the day they are just investments. So here's how I handle this dilemma. I agree with people who argue that a developer should learn new languages regularly. Probably not a new language a year or even more - I simply am not able to handle this. But every two to three years I try to grasp one of the popular languages and see what happens. A few years ago I did this with C# and threw it away afterwards. It simply is not relevant for my business. The last time I chose Groovy, and this was certainly one of my best decisions lately. Groovy has saved me tons of time in several projects since then. In case you are curious: I have never used Groovy for a production system until now. But I use it regularly to pimp my development infrastructure. This infrastructure is only needed during the development phase, not for maintenance. If some of the Groovy stuff is really relevant for maintenance, I convert it to Java before shipping it to the customer. I do this because I believe that in the maintenance phase things must be simple to be cost efficient. Two programming languages (Java and Groovy) for one system are not simple, hence I avoid this.

Now, if someone argues we should use a brand new language like Clojure for a given project, because it is cool and certainly better than good old Java, I am very cautious. Java is a suitable language for most requirements in IT business I am aware of, so introducing something new for production use must be evaluated very carefully. Typically, this evaluation results in keeping Java. But if the arguments are valid, like, for example, the need for massively parallel execution of the internal processing, I certainly would not disallow the usage of Clojure just because it is new.

Sunday, November 21, 2010

Does technology still matter?

But, and this is a notable difference to all the conferences before, two of the keynotes had nothing to do at all with new technologies. Instead, the speakers (Nico Josuttis and Gernot Starke) talked a lot about the "soft" side of IT. Basically they asked themselves and the audience: Does technology still matter? This is surely a challenging question for a conference packed with developers. Nonetheless, both talks were excellent and made me think again about how to handle "all-new" technologies in my job.

The bottom line of these thoughts is: I'm sure technology matters, but I'm not sure how much. In large projects, the fraction of project staff that are actually IT experts is amazingly low. You'll find a lot of managers, business analysts (who are experts, too, of course, but not driven by technology) and, to be honest, a lot of developers that are, well, not experts. This is even more true if you look at projects that have entered the maintenance phase of their life cycle.

This is not a new discovery, of course. But do we really take this fact into account when designing and implementing new systems and technologies? I try to, but the problem is, I like technology. It's so much more fun using a brand-new technology for a given problem than an old, boring (but proven) one. And I know most other experts out there feel the same. That's why they talk at conferences about JDK 7 and 8, about the pros and cons of closures and, like me, the magic of Maven dependency management.

Over the last couple of years, and right now during the keynotes at W-JAX, I got the feeling that this attitude of concentrating on technology details somehow is, yes, irrelevant. It's cool for us, the developers that spend their spare time reading blogs. But, as Nico Josuttis said in his keynote: "The war of experts in IT is lost". IT is a mainstream technology today. It's expected to be reliable, efficient, affordable, stable - but not cool. And it's expected that all those technologies are manageable by non-experts. The people that drive the current technologies in new directions should keep this in mind. Is a discussion about the right way to incorporate closures in JDK 8 appropriate from this point of view? Certainly not. Is a discussion on how to consistently make Java easier to understand for non-experts (like the Groovy guys did) appropriate? In my opinion, certainly yes.

Friday, November 5, 2010

NetBeans 7.0 M2 and Maven 3 - Better than M2Eclipse?

I freshly installed NetBeans and opened my Maven project. No need for some sort of special import here. I simply chose File | Open Project and selected the project folder containing the Maven root project. NetBeans even marks all Maven projects in the tree with a special icon.

The import finished amazingly fast. NetBeans analyzed the project structure for a while after the import in a background process, but nevertheless I could immediately start to work with my modules.

After the background processes finished, some problems showed up in the project list. NetBeans marks the respective projects with warning and error icons. In the context menu of a project you can click on "Show and resolve problems ..." to open a summary of all problems.

In my case Maven could not find some dependencies in my local repo, which is OK (I did some cleaning up the days before and obviously I was a little bit too rigorous). Additionally, I was only kind-of online during the tests.

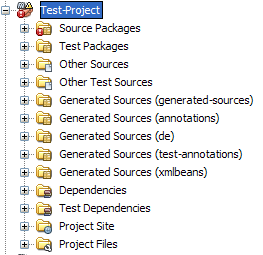

Next, I took a look at the folder structure inside one of the modules. Obviously, NetBeans knows a lot more about Maven projects than Eclipse/M2Eclipse. For example, it knows about generated-sources right out-of-the-box. Even more important, it knows that Maven maintains separate dependencies (and classpaths) for "normal" modules and for tests.

The latter is really important in my eyes. The Eclipse builders don't distinguish between classpaths for modules and for module tests - regardless of whether you use Maven/M2Eclipse or not. As a consequence, you will regularly run into issues where the Eclipse builder runs flawlessly, but the Maven build does not. This happens simply because the main (non-test) code of one of your modules references a dependency that is declared with test scope. Eclipse will not recognize this, but Maven will. And NetBeans, too. I like that!

Besides the more or less cosmetic features I was especially interested in how NetBeans performs compared to Eclipse/M2Eclipse. To test this I did some simple refactorings in a central module of one of my biggest Maven projects. Since this module is referenced by nearly all other modules, the builder gets a lot of work. In Eclipse this means that for all dependent modules both the M2Eclipse builder and the internal builder are invoked. This works OK, but again, it is anything but smooth. My first tests in NetBeans have been quite encouraging. First, the refactoring worked nearly as fast as in Eclipse. Second, the build really executed in the background. That is, I was able to continue working during the build. Third, the build completed in seconds, not minutes.

After these basic tests I played with some of the other features. One thing I would like to mention is the dependency graph in NetBeans. It works similarly to the one from M2Eclipse, but adds some useful features. For example, if you select an artifact, it visually pulls that artifact and all of its direct dependents out of the graph (and dims all others). It's hard to explain, but very cool and useful. Try it out yourself!

I also found some features of M2Eclipse that are not supported in NetBeans (at least I didn't find them). There is no such thing as a full-blown POM editor. NetBeans has a POM editor, but it "just" supports editing directly in the XML. The XML editor has a lot of features, like inserting new dependencies using an artifact search. This is perfect for me, since I never used the comprehensive M2Eclipse editors, but I know many people do.

Summary: I am quite impressed by how well-integrated NetBeans 7M2 and Maven 3 are. Both the functionality and especially the performance are in my opinion better than what you currently get with Eclipse and M2Eclipse. But please keep in mind that this only reflects my experiences of a first try. Certainly, if I start working with NetBeans more intensively, more problems will arise. And, of course, a new release of M2Eclipse will be out soon; maybe this fixes many of the current issues. But still: I think NetBeans 7 is well worth a try for all developers that rely on Maven as a build system.

Wednesday, October 13, 2010

Maven Magic at W-JAX 2010

Sunday, October 10, 2010

Maven 3.0 final: First impressions

I had been impatiently waiting for Maven 3.0 for a while, and was very happy to read that the final release is available now. I did some quick tests with the final release using a pretty large Maven 2.x project. It consists of 60+ modules and uses all sorts of plugins (e.g. ant, buildnumber, xmlbeans, jaxb, assembly, weblogic, jar, ear, all sorts of reporting plugins and of course all the standard ones). After switching the build process from Maven 2.2.1 to Maven 3.0 the normal lifecycle ran without any problems. I noticed, as with the RCs before, that Maven 3 is much less noisy than 2.2.1. I like that. The build time was about the same as before (about 13 minutes on my machine). As soon as I activated the new parallel build feature (mvn -T4 clean install) the build time dropped to about 9:30 minutes. Cool! However, Maven complains that some of the plugins I use are not yet thread safe. The build completed without error anyhow, but I will probably have to check for plugin updates during the next weeks.

I did not yet try to run the site lifecycle. The site generation in Maven 3 has been completely reworked and is not compatible with Maven 2.x. To make this work, I will have to rework my POMs and use the new versions of the site plugin.

Summary: Maven 3.0 looks good. The internals of Maven 3 have more or less been completely refactored, so the high degree of backward compatibility is quite impressive. Of course, one could argue that Maven 3 does not offer enough new user-visible features to justify a new major release. But in my opinion the refactored architecture and code base are much more important than new features.

Saturday, October 2, 2010

Configuring M2Eclipse for large projects

The M2Eclipse plugin integrates Maven in the Eclipse development environment. This integration is inherently tricky, since both Eclipse JDT and Maven implement a separate build process. Basically, what most developers would like to have is the amazingly fast incremental builder of JDT combined with some of the magic of Maven (i.e., dependency management, some plugin features, building jars/wars/ears, ...).

In the default configuration, M2Eclipse integrates some steps of a typical Maven build in the Eclipse workspace build. Other steps, like the compilation of Java classes, are still executed by Eclipse itself. Details about this configuration process can be found here.

The default approach works well in my experience for small to medium-sized projects. However, once you start to extend the Maven build lifecycle with additional plugins and you have to handle large projects, the default M2Eclipse configuration considerably slows down the workspace builds in Eclipse. Personally, I had severe problems with speed and heap consumption in a project that heavily uses plugins for XMLBeans, web service and JAXB generation. I have bound these plugins to the Maven lifecycle phases in my POMs (for example, to the phase generate-sources). As a consequence, all these plugins got executed during the Eclipse workspace build. Apparently this happened because the default M2Eclipse configuration does not exclude those plugins from an Eclipse workspace build.

This default behavior is OK for small projects. It guarantees that, for example, the XMLBeans plugin is executed during an Eclipse build. The classes generated by the plugin will then be available for the rest of the build. However, in a large project scenario, I prefer to manually execute the XMLBeans plugins by executing for example mvn generate-sources. If I forget this step, Eclipse will miss the generated classes and report compilation failures. But omitting the plugin from the workspace build significantly improves performance and reduces the heap space required for Eclipse.

The following custom M2Eclipse configuration worked well for me in a Maven project with 60+ modules. I just added it to the root POM of the project. Now, M2Eclipse only manages the Eclipse build path and copies resources (I need this because of the resource filtering feature) during normal workspace builds. This is OK for most of the development work. If I want to generate sources, build jar/war/ear files or perform some other "extraordinary" build steps, I simply start Maven manually and refresh the Eclipse workspace afterwards.

    <profiles>
      <profile>
        <id>m2e</id>
        <!-- Active only when the m2e.version property is set, i.e. when the build runs inside M2Eclipse -->
        <activation>
          <property><name>m2e.version</name></property>
        </activation>
        <build>
          <plugins>
            <plugin>
              <groupId>org.maven.ide.eclipse</groupId>
              <artifactId>lifecycle-mapping</artifactId>
              <version>0.10.0</version>
              <configuration>
                <mappingId>customizable</mappingId>
                <!-- Let M2Eclipse manage the Eclipse build path -->
                <configurators>
                  <configurator id="org.maven.ide.eclipse.jdt.javaConfigurator" />
                </configurators>
                <!-- Only the resources plugin runs during workspace builds (needed for resource filtering) -->
                <mojoExecutions>
                  <mojoExecution>
                    org.apache.maven.plugins:maven-resources-plugin::
                  </mojoExecution>
                </mojoExecutions>
              </configuration>
            </plugin>
          </plugins>
        </build>
      </profile>
    </profiles>

Tuesday, September 21, 2010

OpenProj - Not bad, but ...

I am not an expert in project planning tools at all. But if a customer asks me to take the role of a technical lead or to coach another lead in a project, I will need a tool like MS Project. A lot of people take Excel instead, but over the years I got used to MS Project and now I really like it.

A technical lead typically has at least some planning tasks to fulfill. He must estimate the effort of the upcoming tasks, put them on some time line and assign the members of his team to the tasks. I am using two different kinds of tools to get this part of the job done as easily and efficiently as possible: a task management system like Redmine or Jira, and a project planning tool, which up to now typically was MS Project.

In MS Project I'm performing the time and resource planning. For the actual assignment of the tasks and the tracking of the progress I'm using the task management system. One problem with this approach is that some customers won't pay for an MS Project license (yes, I could buy one myself, of course. But often I am not even allowed to bring my own laptop). When I recently talked with a colleague about this problem, he pointed me to OpenProj. OpenProj is an open source project planning tool written in Java. Its GUI and the basic feature set are very similar to MS Project.

I did some quick tests to check if the features I use most in MS Project work in OpenProj, too. The following features of OpenProj work OK for me:

- Create resources with individual calendars

- Choose individual columns for the task list

- Easily enter tasks in the Gantt-View.

- Enter estimated effort of a task in hours or days

- Assign one or multiple resources to a task by simply entering the name in the task list

- Duration of a task is calculated automatically based on the entered effort

- Dependencies to other tasks can be entered manually in the task list

- Tasks can be easily grouped (this worked only partly for me, because I didn't manage to group tasks by keyboard)

- Aggregated properties (effort, duration, ...) of a task group are calculated automatically

- Easily create milestones (by entering a duration of zero days, like in MS Project)

- Choose different planning restrictions for a task (starts at, ends at, enter a delay, etc.)

- Easily monitor the resource usage. In fact, this works even better than in MS Project, because OpenProj offers some comfortable, combined views of Gantt charts and resource usage.

- Highlight the critical path in the Gantt chart

- Export task list to Excel. This basically works by Copy&Paste, but is not very comfortable. For example, you lose all task groupings when pasting the tasks in Excel.

Now for the bad news: OpenProj is not able to automatically level the resource usage (at least I have found nothing about this feature in the documentation or in the GUI). That is, if you assign multiple tasks to the same resource in the same period of time, this resource will remain over-allocated. You can easily monitor this in the resource usage view, but you have to resolve it manually.

Summary: OpenProj supports most of the basic functionality I'm personally using in a desktop planning tool. However, its lack of automatic resource leveling is a show stopper for me. I could work around this in small projects. But, honestly, in small projects I would rather use some sort of simple ToDo-List Utility like the one from AbstractSpoon Software.

Wednesday, September 15, 2010

The pitfalls of software metrics

In this post I'm going to talk about software metrics in general and specifically about the pitfalls involved with using them. What people are trying to do when using software metrics is to capture certain aspects of a software system in a number that is, at first glance, easy to understand. This is why metrics are fascinating, especially for project leads and managers. Software is kind of an "invisible product" for people that are not able to read source code. So all means to shed some light on what this expensive bunch of developers is actually doing all day are very welcome.

If you're an architect or technical lead in your project, it is part of your job to improve transparency for the management. People that give the money certainly should know what progress you are making and if the quality of the produced code is OK. Metrics can help you in satisfying the demand for information and yes, control. But in my experience metrics can cause more harm to a project than they might actually help, if they are applied carelessly.

To prevent this, we first need some basic understanding of what software metrics actually are. Typically software metrics are divided into three categories:

- Product metrics: Metrics of this kind are used to measure properties of the actual software system. Samples are system size, complexity, response time, error rates, etc.

- Process metrics: Process metrics tell you something about the quality of your development process. For example, you could measure how long it takes on average to fix a bug.

- Project metrics: Project metrics are used to describe the project that builds the system. Typical examples are the budget and the team size.

The LOC (lines of code) metric is a good candidate to explain why careless usage of software metrics can be harmful. Let's assume management requests some form of weekly or monthly reporting about the progress of the running projects. At first glance, an easy way to fulfil this request is to run some tool on the source code, calculate the LOC (and, possibly, some other metrics the tool happens to support), put everything into an Excel sheet, generate a chart and put this into the project status report. I am pretty sure people will like it. First, charts have been very welcome in nearly all status meetings I've ever been in. And secondly, the LOC metric is very easy to understand. More lines of code compared to the last meeting sounds good to anyone.

However, things will get complicated as soon as the LOC number remains constant or, even worse, shrinks from meeting to meeting. Now, don't blame the people attending the meeting for getting nervous. They asked for a progress indicator and you gave them LOC. The good thing about the LOC metric is that you can calculate it easily. The bad thing is, it does not measure the system size. It measures the number of source code lines (whatever that is, but we'll ignore this detail for now). The count of source code lines is only an indicator of how "big" a software system is. For example, a skillful developer will reuse code as often as possible. This involves reworking existing code in a way that new code can be implemented in an efficient way. At the end of the day, maybe just a few, none or even FEWER lines of code have been "produced". Nonetheless, the system size has increased. Unfortunately, only the coolest of all project leads and managers in a status meeting will believe this ...
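The "whatever that is" remark can be made concrete with a minimal sketch. The class `LocCounter` and both counting rules are assumptions for illustration and are not taken from any real metrics tool. The same source yields different LOC numbers depending on whether blank lines and comments are counted:

```java
import java.util.List;

// Hypothetical helper for illustration only, not taken from any metrics tool.
class LocCounter {

    /** Counts every physical line, including blank lines and comments. */
    static long physicalLines(List<String> source) {
        return source.size();
    }

    /**
     * Counts lines that are neither blank nor pure "//" comments.
     * Block comments are not handled in this sketch and count as code.
     */
    static long codeLines(List<String> source) {
        return source.stream()
                .map(String::trim)
                .filter(line -> !line.isEmpty())
                .filter(line -> !line.startsWith("//"))
                .count();
    }

    public static void main(String[] args) {
        List<String> source = List.of(
                "// Adds two numbers",
                "int add(int a, int b) {",
                "",
                "    return a + b;",
                "}");
        System.out.println("physical lines: " + physicalLines(source));
        System.out.println("code lines:     " + codeLines(source));
    }
}
```

Two tools that use different rules will report different "sizes" for the same system, which is one more reason not to present the raw number as a progress indicator.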

To avoid the described scenario, we must select software metrics carefully. The selection criterion must not be that a metric is supported by a certain tool out of the box. Instead, the goals of the measurement process are relevant. In the example, the overall goal was to provide a report on the project progress. Now, we formulate questions we have to answer in order to reach this goal. Since we're talking about a software project here, a good question would be "How many use cases have already been implemented?". This still is rather generic. So we go into more detail and ask, for each use case:

- Is the source code checked into the repository?

- Do module tests exist and are they executing without errors?

- Have the testers confirmed that the implementation is feature-complete according to the use case description?

Because of the three steps Goal, Question and Metric this approach is also known as GQM approach. In my experience it works OK. One of its main advantages is that the used metrics are well-documented - just take a look at the questions. Additionally, the GQM approach is easy to apply in practice, everybody understands it and it puts the tools at the end of the process and not at the beginning.
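As a rough sketch of how the questions above turn into a metric, the following example counts a use case as implemented only if all three questions are answered with yes. The names `UseCaseProgress`, `UseCase` and the sample use cases are made up for illustration; in a real project the answers would come from the repository, the build server and the testers.

```java
import java.util.List;

// Hypothetical sketch of a GQM-style progress metric, names are illustrative.
class UseCaseProgress {

    /** One use case with the answers to the three questions. */
    record UseCase(String name, boolean checkedIn, boolean testsPass, boolean testersConfirmed) {

        /** A use case counts as implemented only if every question is answered with yes. */
        boolean isImplemented() {
            return checkedIn && testsPass && testersConfirmed;
        }
    }

    /** The metric: number of use cases that are fully implemented. */
    static long implementedCount(List<UseCase> useCases) {
        return useCases.stream().filter(UseCase::isImplemented).count();
    }

    public static void main(String[] args) {
        List<UseCase> useCases = List.of(
                new UseCase("Create order", true, true, true),
                new UseCase("Cancel order", true, true, false),
                new UseCase("Print invoice", false, false, false));
        System.out.println(implementedCount(useCases) + " of " + useCases.size()
                + " use cases implemented");
    }
}
```

Unlike LOC, this number cannot shrink just because somebody cleaned up the code.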

Tuesday, September 7, 2010

To DTO or not to DTO ...

In every single Enterprise Java project I am faced with the question: To DTO or not to DTO? A DTO (Data Transfer Object) is a potential design element to be used in the service layer of an enterprise system. DTOs have a single purpose: To transport data between the service layer and the presentation layer. If you use DTOs you have to pay for it. That is, you need mapping code that copies the data from your domain model to the DTOs and the other way round. On the other hand, you get additional functionality from the DTOs. For example, if you have to support multiple languages, measurement systems and currencies, all the transformation stuff can be encapsulated in mapping code that copies data between domain model and DTOs.
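To make the idea concrete, here is a minimal sketch of a domain object, a DTO and the mapping code between them. All names (`ProductMapping`, `Product`, `ProductDto`, the `imperial` flag) are assumptions for illustration. The mapping hides an internal attribute from the presentation layer and encapsulates the conversion between measurement systems:

```java
import java.util.Locale;

// Hypothetical sketch, all names are illustrative.
class ProductMapping {

    /** Domain object as used in the backend; purchasePrice is internal. */
    record Product(long id, String name, double weightKg, double purchasePrice) {}

    /** DTO for the presentation layer: no internal attributes, weight is ready for display. */
    record ProductDto(long id, String name, String weight) {}

    /** Mapping code: copies the data and encapsulates the unit conversion. */
    static ProductDto toDto(Product product, boolean imperial) {
        String weight = imperial
                ? String.format(Locale.ROOT, "%.1f lb", product.weightKg() * 2.20462)
                : String.format(Locale.ROOT, "%.1f kg", product.weightKg());
        return new ProductDto(product.id(), product.name(), weight);
    }

    public static void main(String[] args) {
        Product anvil = new Product(1L, "Anvil", 10.0, 50.0);
        System.out.println(toDto(anvil, false));
        System.out.println(toDto(anvil, true));
    }
}
```

The sketch only shows one direction. The way back from DTO to domain object needs its own mapping code, which is part of the price you pay.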

When NOT to use DTOs:

- Small to mid-sized project team. That is: At most 5 people.

- Team sits in one location.

- No separate teams for GUI and backend.

- If you don't use DTOs, the presentation and the backend will be tightly coupled (the domain model will be used directly in the presentation layer). This is OK if the project scope is limited and you know more or less what the product will look like in the end. It is definitely NOT OK if you work on some kind of strategic, mission critical application that is likely to live for 10-15 years and will be extended by multiple teams in the future.

- Highly skilled team with developers that understand the technical implications of tight coupling between backend domain model and presentation. For example, if you use some kind of OR mapping framework to persist the domain model, the team has to understand under what conditions what data is available in the presentation layer.

- Only limited I18N requirements. That is: Multiple languages are OK, multiple measurement systems, complex currency conversions and so on are not.

When to use DTOs:

- Teams with more than 5 people. Starting with this size, teams will split up and the architecture needs to take this into account (just a side note: the phenomenon that the architecture of a system corresponds to the structure of the development team is called Conway's law). If teams split up, the tight coupling between backend and presentation is unacceptable, so we need DTOs to prevent this.

- Teams that work distributed at several locations. The worst-case examples are projects that use some kind of nearshoring or offshoring models.

- The need for complex mapping functionality between domain model in the backend and the presentation layer.

- Team with average skills, for example with junior developers or web-only developers. The usage of DTOs allows them to use the backend services as a "black box".

- Reduce overhead between backend and presentation. DTOs can be optimized for certain service calls. An optimized DTO contains only those attributes that are absolutely required. Popular examples are search services that return lists of slim DTOs. In fact, performance optimization is often the one and only argument to introduce DTOs. IMO, this factor is often overemphasized. It may be very important for "real" internet applications that produce a very high load on the backend. For enterprise systems, one should carefully consider all pros and cons before introducing DTOs on a wide scale in an architecture "just" for performance reasons. Maybe it is good enough to optimize just the top 3 search services using specialized DTOs and use domain objects in all other services (especially the CRUD services).
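The slim DTO mentioned in the last point could look like the following sketch. `CustomerSearch`, `Customer` and `CustomerHit` are hypothetical names, and the in-memory list stands in for whatever the real search service queries:

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch of a search service that returns slim DTOs.
class CustomerSearch {

    /** Full domain object with everything the backend knows about a customer. */
    record Customer(long id, String name, String street, String city, List<String> orderIds) {}

    /** Slim DTO: only the attributes a result list needs. */
    record CustomerHit(long id, String name) {}

    /** Returns one slim DTO per customer whose name starts with the given prefix. */
    static List<CustomerHit> search(List<Customer> customers, String namePrefix) {
        return customers.stream()
                .filter(c -> c.name().startsWith(namePrefix))
                .map(c -> new CustomerHit(c.id(), c.name()))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Customer> customers = List.of(
                new Customer(1L, "Alice", "Main St 1", "Berlin", List.of("A-1", "A-2")),
                new Customer(2L, "Bob", "Side St 2", "Hamburg", List.of()));
        System.out.println(search(customers, "Al"));
    }
}
```

Address and order data never leave the backend here, which is where the savings come from when result lists are long.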

Consequences of the usage of DTOs

As I mentioned already, DTOs don't come for free. It is crucial to document the consequences of the introduction of DTOs in the software architecture documentation. The stakeholders of the project must understand that DTOs are a very expensive feature of a system. The most important consequences of DTOs are:

- You must maintain two separate models: a domain model in the backend that implements the business functionality and a DTO model that transports more or less the same data to the presentation layer.

- Easy to understand, but nonetheless something you should mention several times to your manager: Two models cost more than one model. Most of the additional costs arise after initial development: You must synchronize both models all the time in the maintenance phase of the system.

- Additional mapping code is necessary. You must make a well-considered design decision about how the mapping logic is implemented. One extreme is the manual implementation with tons of is-not-null checks. The other extreme is mapping frameworks that promise to do all the work for you automagically. Personally, I've tried out both extremes and several variations in between and have still not found the optimal solution.
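For readers who have not seen the manual extreme, here is a small sketch of what such mapping code tends to look like. The model (`Order`, `Customer`, `Address`) and the flattened `OrderDto` are assumptions for illustration. Every optional reference in the domain model needs its own check:

```java
// Hypothetical sketch of hand-written mapping code with is-not-null checks.
class OrderMapper {

    record Address(String city) {}
    record Customer(String name, Address address) {}
    record Order(String number, Customer customer) {}

    /** Flattened DTO for the presentation layer. */
    record OrderDto(String number, String customerName, String customerCity) {}

    /** Copies the order into a DTO; missing parts of the object graph become null fields. */
    static OrderDto toDto(Order order) {
        if (order == null) {
            return null;
        }
        String customerName = null;
        String customerCity = null;
        Customer customer = order.customer();
        if (customer != null) {
            customerName = customer.name();
            if (customer.address() != null) {
                customerCity = customer.address().city();
            }
        }
        return new OrderDto(order.number(), customerName, customerCity);
    }

    public static void main(String[] args) {
        Order complete = new Order("A-1", new Customer("Alice", new Address("Berlin")));
        Order withoutCustomer = new Order("A-2", null);
        System.out.println(toDto(complete));
        System.out.println(toDto(withoutCustomer));
    }
}
```

With three small classes this is harmless. With a domain model of a few hundred classes it becomes a maintenance topic of its own.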